Introduction

The previous blog post described the results of recent tests of Faster Whisper's transcription accuracy in Transana 5.30. This post uses the same data set and focuses on transcription speed. Speed is more complex and nuanced than accuracy, because more factors influence the results.

The third post in this series will look at the interaction between accuracy and speed and suggest an approach to automatically transcribing your own media files with Faster Whisper in Transana 5.30 and later.

Results – Transcription Speed of Faster Whisper models

Timing for each Faster Whisper transcription started after the transcription model was loaded and ended when the transcript data was returned to the calling routine for processing. Processing speed is reported as elapsed time in seconds.
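The measurement window described above can be sketched in Python. This is a minimal sketch under stated assumptions, not Transana's actual code: `time_transcription` is a hypothetical helper, and it assumes the model has already been loaded so that loading time is excluded. One detail matters with the faster-whisper library in particular: `WhisperModel.transcribe()` returns its segments as a lazy generator, so the transcription work happens only as the segments are iterated, and the timer must stop after the generator is exhausted.

```python
import time
from typing import Any, Callable, Iterable, List, Tuple


def time_transcription(transcribe: Callable[[str], Iterable[Any]],
                       media_path: str) -> Tuple[List[Any], float]:
    """Time one transcription, excluding model loading (hypothetical helper).

    `transcribe` is assumed to wrap an already-loaded model. The timer starts
    just before the call and stops once every segment has been produced,
    so lazily generated results are included in the measured time.
    """
    start = time.perf_counter()
    # list() forces a generator to run to completion before the timer stops.
    segments = list(transcribe(media_path))
    elapsed = time.perf_counter() - start
    return segments, elapsed


# Possible use with faster-whisper (loading happens before timing starts):
# from faster_whisper import WhisperModel
# model = WhisperModel("small", device="cpu", compute_type="int8")
# segments, seconds = time_transcription(lambda p: model.transcribe(p)[0],
#                                        "interview.mp3")
```

Wrapping the call in a lambda that keeps only the first element of `transcribe()`'s `(segments, info)` result lets the same helper time any model size or device setting.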

Measuring transcription speed is complicated because we must take into account the unique characteristics of the data files, the differences between transcription models, and the computer hardware used.

Files

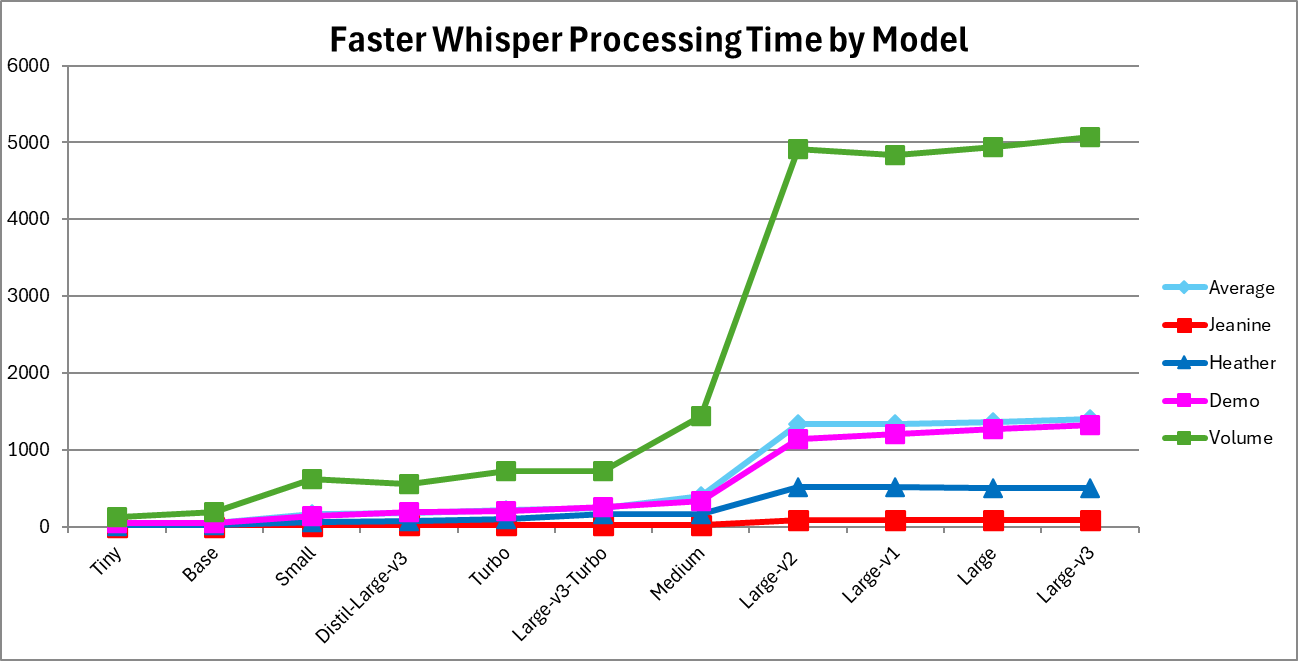

Figure 1

Figure 1 shows the transcription time for each of our four test files, averaged across computers with different hardware. Not surprisingly, different models take different amounts of time to process our files, and the longer the media file, the longer automated transcription takes with every model. Historically, there has been an intuitive relationship between model size and processing speed: larger models are slower. We can still see this pattern in the older tiny, base, small, medium, and large models, but newer models such as distil-large-v3, large-v3-turbo, and turbo break it by being considerably faster than the older large models.

Computer Hardware

The data contain an anomaly that must be taken into account when interpreting Figure 1. It becomes clear when we look more closely at the results from each of the computers used to measure transcription speed. The three Windows computers used in our testing have very different video cards, which led to very different results when Faster Whisper tried to use the video card's Graphics Processing Unit (GPU) during automated transcription.

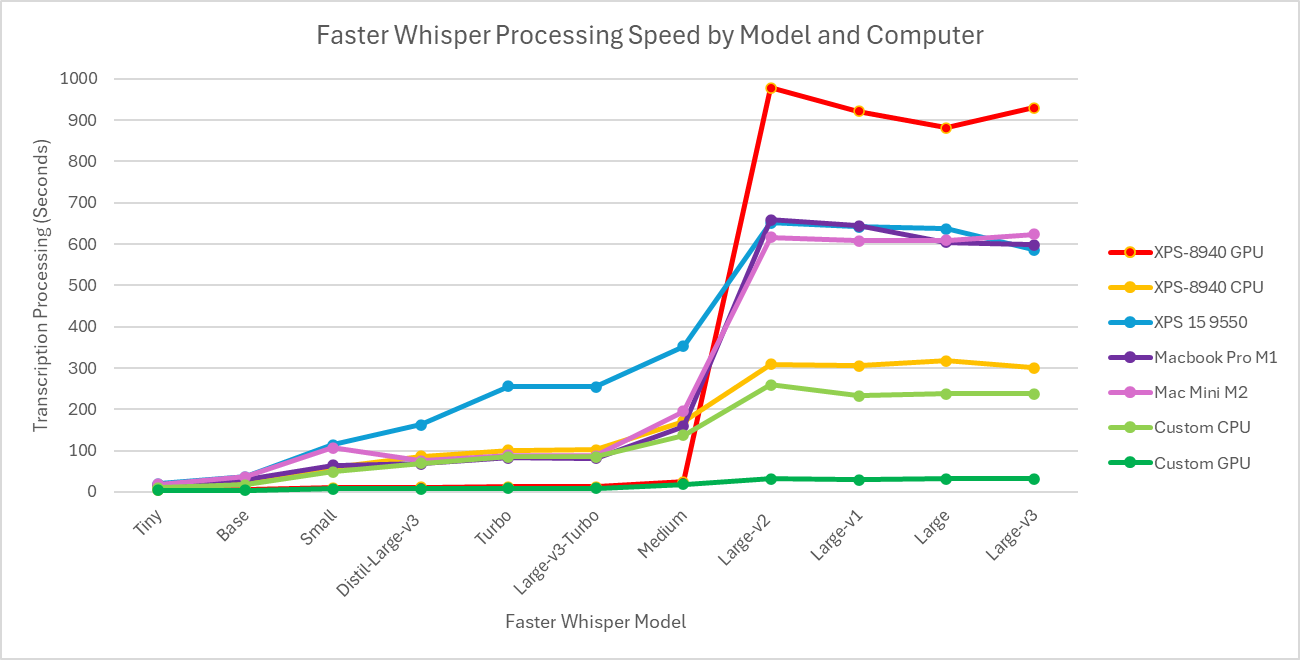

Figure 2

Figure 2 shows automated transcription processing times for a single data file across five computers. Two of these computers have supported GPUs, so data for each of them were collected both with and without the GPU. The horizontal axis lists all Faster Whisper transcription models supported by Transana, in order of average transcription speed. The vertical axis shows the number of seconds required to create the transcript.
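An ordering of models by average transcription time can be computed by averaging each model's time across computer configurations and then sorting. The sketch below is a hypothetical illustration, not the analysis code used for these figures: it assumes the timings are stored as a nested dictionary mapping model name to configuration name to seconds, and `rank_models_by_average_time` is an illustrative name.

```python
from statistics import mean
from typing import Dict, List, Tuple


def rank_models_by_average_time(
        timings: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (model, mean seconds) pairs, fastest first.

    `timings` maps a model name to {computer configuration: seconds}
    (an assumed layout for this sketch).
    """
    averages = [(model, mean(by_config.values()))
                for model, by_config in timings.items()]
    # Sort on the average time; ties are broken by model name so the
    # order is deterministic.
    return sorted(averages, key=lambda pair: (pair[1], pair[0]))
```

Because this is a plain mean, one configuration with unusually long times for a model (such as a GPU that lacks enough memory) pulls that model's average up, which is worth keeping in mind when reading averaged results.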

First, let's consider the two Macs, shown by the light and dark purple lines. Despite using different generations of Apple processors, they show nearly identical performance. Faster Whisper cannot use the GPU built into Apple processors.

The blue line represents a Windows computer, roughly 9 years old, that does not have a graphics card Faster Whisper can use. It is a little slower than the Macs for the models in the middle, but shows similar performance with the fastest and slowest models.

Next, let's look at a new custom-built Windows desktop with a newer NVIDIA graphics card that has 8 GB of dedicated video memory. Faster Whisper processing times using this computer's GPU are represented by the dark green line and are very fast compared to the other tests for all transcription models. This shows that a supported, properly configured GPU makes a huge difference in transcription processing time across all models. The light green line shows the same computer running Faster Whisper without the GPU. These CPU-only results are not much different from those of the older computers until we reach the large-v2 model and the other three large, slow models, where the new computer shows roughly a 15% speed advantage over a computer that is about 5 years older.

Finally, let's look at the 5-year-old XPS 8940 computer, shown by the orange and red lines. The orange line, representing processing without a GPU, is about average for the tiny through medium models, and for the four largest, slowest models it is faster than all the other computers except the new custom-built one. The red line shows that the 5-year-old GPU provides a significant speed boost for the tiny, base, small, distil-large-v3, turbo, large-v3-turbo, and medium models. For the large-v2, large-v1, large, and large-v3 models, however, the red GPU line crosses the orange CPU line: using the GPU considerably slows processing compared with not using it at all. This was quite unexpected.

It turns out that the graphics card in that computer has 6 GB of on-board memory, compared with 8 GB on the newer graphics card in the custom-built computer. The four large models that perform poorly require between 6.5 and 7 GB of memory, while the smaller models require less than 6 GB. When a transcription model does not fit in the video card's on-board memory, the model is divided between GPU memory and system memory, and Faster Whisper's GPU processing takes a big performance hit. For these four large models, we're significantly better off not using the older 6 GB GPU.

This unusual hardware issue has inflated the average processing times shown in Figure 1 for the large, large-v1, large-v2, and large-v3 transcription models. It doesn't change the order of the models by processing speed, but it does exaggerate how slow those four models appear.

Once we understood this issue, we modified Transana's code to compare the amount of memory on the GPU with the memory required by the requested transcription model. If there is not enough GPU memory, Transana now automatically uses CPU processing to avoid the performance problems we discovered.
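The decision rule described above can be sketched as follows. This is a minimal sketch, not Transana's implementation: `choose_device` is a hypothetical function, and the caller is assumed to supply both the model's memory requirement and the GPU's on-board memory (how those are measured is not shown). The returned strings match the `device` values accepted by faster-whisper's `WhisperModel` ("cpu" and "cuda").

```python
from typing import Optional


def choose_device(model_required_gb: float,
                  gpu_memory_gb: Optional[float]) -> str:
    """Return "cuda" only when the whole model fits in GPU memory.

    Hypothetical helper. gpu_memory_gb is None when no supported GPU is
    present (for example, on a Mac).
    """
    if gpu_memory_gb is None:
        # No GPU that Faster Whisper can use.
        return "cpu"
    if model_required_gb > gpu_memory_gb:
        # The model would be split between GPU and system memory, which was
        # slower than CPU-only processing in the tests described above.
        return "cpu"
    return "cuda"
```

With the figures from the text, a large model needing about 6.5 GB would run on the CPU on the 6 GB card (`choose_device(6.5, 6.0)` returns "cpu") but on the GPU with the 8 GB card (`choose_device(6.5, 8.0)` returns "cuda"). A production check would probably also leave some headroom for other memory use on the card; that margin is an assumption not covered here.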

Conclusions

This blog post presents the results of processing speed tests for the 11 Faster Whisper models available for automated transcription. Comparing the relative speeds of these models shows the impact of having a supported and properly configured GPU: the slowest model with a GPU ends up being faster than most of the models without one. But even if you don't have a GPU, or you use a Mac, where Faster Whisper cannot use the Apple processor's GPU, you can reduce your processing time through a judicious choice of transcription model.

The need for fast transcription of your media files must be balanced against the critical importance of transcription accuracy. The third blog post in this series discusses the interaction between accuracy and processing speed, and suggests an approach to take when you are selecting a Faster Whisper model.